Prerequisites

To understand this article, you should be familiar with:

- Basic knowledge of operating systems.

- How Future works in Rust.

- The state machine generation behind async and await.

- How spawn and block_on work in Rust asynchronous runtimes.

- File descriptors in Unix-like systems.

- The workings of select, poll and epoll in Linux.

What is io_uring?

In Linux, system calls like read are typically used to interact with files, sockets and other I/O devices. By default these system calls are synchronous, which means the calling thread is blocked until the operation completes. While this design keeps the programming model simple, it can lead to inefficiencies, especially in high-performance or latency-sensitive applications. In such cases, we often need mechanisms that allow the calling thread to continue executing while the I/O operation is in progress. This need gave rise to asynchronous I/O mechanisms.

Initially, mechanisms like select, poll and epoll were introduced to address this issue. These mechanisms notify the user space when a file descriptor becomes ready for I/O, but the actual reading or writing still requires synchronous system calls after receiving the notification. Although they reduce idle wait times, they don’t completely eliminate the overhead associated with synchronous calls.

This is where io_uring stands out. io_uring is a high-performance asynchronous I/O framework that enables system-call-like operations to be executed without blocking the calling thread. By leveraging io_uring, applications can achieve greater efficiency and scalability.

Here are some key features of io_uring:

- Reduced User-Kernel Transitions: Unlike traditional system calls, io_uring minimizes user-kernel context switching, which significantly improves performance by reducing overhead.

- True Asynchrony: Operations initiated via io_uring do not block the initiating thread. While the operation executes in the background, the thread can continue performing other tasks, which is especially beneficial in multi-threaded or event-driven systems.

- Batching Capability: io_uring allows multiple operations to be submitted together in a batch, reducing the cost of frequent submissions and improving throughput.

- System-call-like Operations: The io_uring API closely resembles traditional system calls, making it easier to integrate with existing codebases.

How io_uring Works

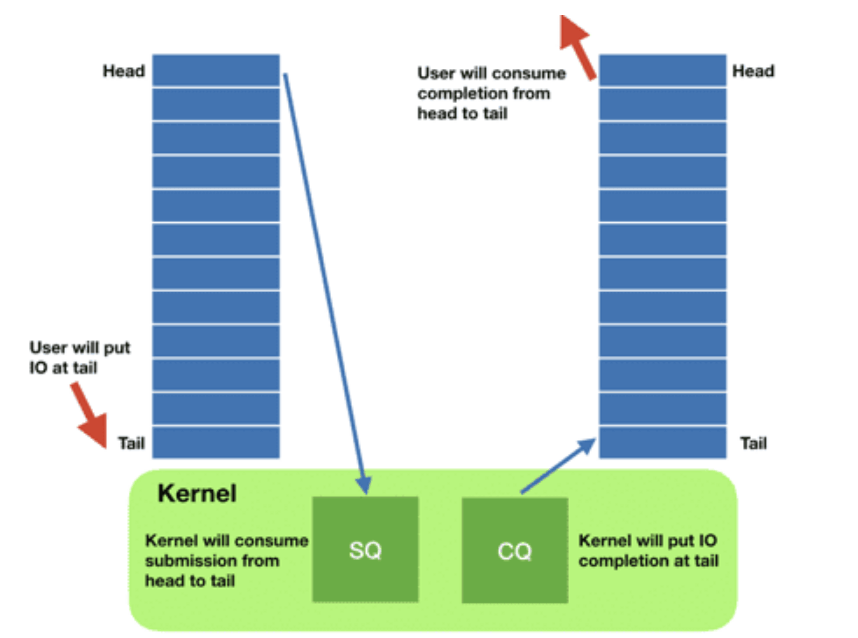

The io_uring framework is built around two primary ring buffers that the kernel allocates and shares with user space:

- Submission Queue (SQ): This is where user space enqueues operations for the kernel to execute. It holds Submission Queue Entries (SQEs) that describe the requested operations.

- Completion Queue (CQ): This is where the kernel posts the results of completed operations. It contains Completion Queue Entries (CQEs) with details of the operation’s outcome.

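Each queue follows a single-producer, single-consumer model: user space produces SQEs and the kernel consumes them, while the kernel produces CQEs and user space consumes them. Because each side only advances its own index, entries can pass between user and kernel space efficiently.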
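To make the single-producer, single-consumer idea concrete, here is a minimal sketch of a ring with separate head and tail indices. The `Ring` type and its methods are hypothetical names for illustration. The real io_uring rings live in memory shared with the kernel and use atomic loads and stores with memory barriers, which this single-threaded sketch leaves out.

```rust
/// A toy fixed-capacity ring in the style of io_uring's queues:
/// the producer only advances `tail`, the consumer only advances `head`.
pub struct Ring<T> {
    slots: Vec<Option<T>>,
    mask: u32, // capacity - 1; capacity must be a power of two
    head: u32, // next slot to consume (owned by the consumer)
    tail: u32, // next slot to fill (owned by the producer)
}

impl<T> Ring<T> {
    pub fn new(capacity: u32) -> Self {
        assert!(capacity.is_power_of_two(), "capacity must be a power of two");
        Ring {
            slots: (0..capacity).map(|_| None).collect(),
            mask: capacity - 1,
            head: 0,
            tail: 0,
        }
    }

    /// Number of entries produced but not yet consumed.
    pub fn len(&self) -> u32 {
        self.tail.wrapping_sub(self.head)
    }

    /// Producer side: hands the entry back if the ring is full.
    pub fn push(&mut self, entry: T) -> Result<(), T> {
        if self.len() == self.mask + 1 {
            return Err(entry);
        }
        let idx = (self.tail & self.mask) as usize;
        self.slots[idx] = Some(entry);
        self.tail = self.tail.wrapping_add(1); // publish the entry
        Ok(())
    }

    /// Consumer side: returns `None` if the ring is empty.
    pub fn pop(&mut self) -> Option<T> {
        if self.len() == 0 {
            return None;
        }
        let idx = (self.head & self.mask) as usize;
        let entry = self.slots[idx].take();
        self.head = self.head.wrapping_add(1); // free the slot
        entry
    }
}
```

The indices grow without bound and are masked only when a slot is addressed, so `tail - head` always gives the number of pending entries, even after the counters wrap around.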

Here is how io_uring works, step by step:

In User Space:

- The application begins by asking the kernel to create an io_uring instance and mapping its ring buffers into its own address space. This shared memory holds both the Submission Queue and the Completion Queue.

- The user constructs a Submission Queue Entry (SQE) that describes the operation to perform. This could be a file read, write, or another supported operation.

- The constructed SQE is then pushed to the Submission Queue.

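- The user updates the tail pointer of the Submission Queue to publish the new entry, then notifies the kernel with the io_uring_enter system call. If kernel-side submission queue polling is enabled, the kernel notices the new tail on its own and no system call is needed.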

- The application waits for results in the Completion Queue. This waiting can be active or passive depending on the application’s design.

- Once the operation completes, the application retrieves the corresponding Completion Queue Entry (CQE) and processes the result.

In Kernel Space:

- The kernel retrieves the SQE from the Submission Queue and validates it.

- The kernel executes the operation described by the SQE. This could involve reading data, writing to a file, or another supported action.

- After completing the operation, the kernel posts a CQE with the result to the Completion Queue, allowing the user space to process the outcome.

By avoiding unnecessary system calls and reducing the need for user-kernel transitions, io_uring achieves remarkable efficiency.
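The steps above can be sketched as a toy model in plain Rust. Everything here is a hypothetical stand-in: `ToyRing`, `Sqe`, `Cqe` and `Op` are illustrative types, and `kernel_process` only pretends to be the kernel. No real I/O takes place; the point is the order of events and the role of user_data.

```rust
use std::collections::VecDeque;

/// Hypothetical, simplified operations an SQE can describe.
#[derive(Debug, Clone, PartialEq)]
pub enum Op {
    Nop,
    /// Pretend to read `len` bytes from file descriptor `fd`.
    Read { fd: i32, len: u32 },
}

/// Simplified Submission Queue Entry.
#[derive(Debug, Clone, PartialEq)]
pub struct Sqe {
    pub op: Op,
    pub user_data: u64,
}

/// Simplified Completion Queue Entry.
#[derive(Debug, Clone, PartialEq)]
pub struct Cqe {
    pub user_data: u64,
    pub result: i32,
}

#[derive(Default)]
pub struct ToyRing {
    sq: VecDeque<Sqe>,
    cq: VecDeque<Cqe>,
}

impl ToyRing {
    /// User space: enqueue an SQE on the Submission Queue.
    pub fn push(&mut self, sqe: Sqe) {
        self.sq.push_back(sqe);
    }

    /// Pretend kernel: take each SQE, validate it, "execute" it, and post
    /// a CQE carrying the same user_data. Returns the number of SQEs consumed.
    pub fn kernel_process(&mut self) -> usize {
        let mut consumed = 0;
        while let Some(sqe) = self.sq.pop_front() {
            let result = match sqe.op {
                Op::Nop => 0,
                // An invalid descriptor fails validation: -9 is -EBADF on Linux.
                Op::Read { fd, .. } if fd < 0 => -9,
                // A successful read reports the number of bytes read.
                Op::Read { len, .. } => len as i32,
            };
            self.cq.push_back(Cqe { user_data: sqe.user_data, result });
            consumed += 1;
        }
        consumed
    }

    /// User space: retrieve the next CQE, if any.
    pub fn pop_completion(&mut self) -> Option<Cqe> {
        self.cq.pop_front()
    }
}
```

Pushing two reads, calling `kernel_process()`, and then draining `pop_completion()` yields one CQE per SQE, each carrying the user_data of the request that produced it.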

Using io_uring with Rust

The io-uring crate provides a Rust-friendly interface for leveraging io_uring. Below is an official example of using it to perform asynchronous file reads:

| |

Here are the key points to note in this example:

- The read_e operation mimics the read system call but is executed asynchronously by the kernel.

- The user_data field allows you to tag operations, making it easier to associate CQEs with their corresponding SQEs.

- Ensure safety and validity when interacting with the submission queue, as invalid entries can lead to runtime errors. For instance, attempting to read from an invalid file descriptor would result in an error being posted to the Completion Queue.
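Errors posted to the Completion Queue arrive as a negative errno value in the CQE's result field, mirroring how the raw system call would fail. A small helper can turn that into an ordinary Rust io::Result. The function name `cqe_result_to_io` is hypothetical and is not part of the io-uring crate; it uses only the standard library.

```rust
use std::io;

/// Interpret a CQE result the way a system call return value is interpreted:
/// a negative value is `-errno`, a non-negative value is the operation's
/// result (for a read, the number of bytes read).
pub fn cqe_result_to_io(result: i32) -> io::Result<u32> {
    if result < 0 {
        // wrapping_neg avoids an overflow panic on i32::MIN in debug builds.
        Err(io::Error::from_raw_os_error(result.wrapping_neg()))
    } else {
        Ok(result as u32)
    }
}
```

With this in place, a failed read on a bad file descriptor surfaces as an `io::Error` that the rest of the program can handle like any other I/O failure.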

This example demonstrates how io_uring enables non-blocking file reads with minimal overhead, making it an excellent choice for performance-critical applications.

io_uring in Rust’s Asynchronous Runtime

Using io_uring directly in an application can be cumbersome. Instead, it’s often integrated into a runtime to simplify asynchronous I/O operations.

I am developing a Rust runtime based on io_uring. Here is how it works conceptually:

Polling Futures

When polling a Future:

- The first poll creates an SQE and pushes it to the Submission Queue. At this point, the operation is initiated but not yet complete.

- The poll function returns Poll::Pending, indicating that the result is not yet available.

- The executor continues polling the Future. It keeps returning Poll::Pending until the corresponding CQE is available in the Completion Queue.

- Once the operation completes, the Future retrieves the result from the CQE and returns Poll::Ready with the operation’s outcome.
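The polling sequence above can be sketched with a hand-written Future. This is a simplified illustration and not the runtime's actual code: `MockRing`, `OpFuture` and `noop_waker` are hypothetical names, and the ring is replaced by an in-memory stand-in so the example needs only the standard library.

```rust
use std::cell::RefCell;
use std::collections::{HashMap, VecDeque};
use std::future::Future;
use std::pin::Pin;
use std::rc::Rc;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// Hypothetical stand-in for the ring.
#[derive(Default)]
pub struct MockRing {
    /// Pretend Submission Queue: one user_data tag per pushed SQE.
    pub submitted: VecDeque<u64>,
    /// Results taken from CQEs, keyed by user_data.
    pub completed: HashMap<u64, i32>,
}

pub struct OpFuture {
    ring: Rc<RefCell<MockRing>>,
    user_data: u64,
    submitted: bool,
}

impl OpFuture {
    pub fn new(ring: Rc<RefCell<MockRing>>, user_data: u64) -> Self {
        OpFuture { ring, user_data, submitted: false }
    }
}

impl Future for OpFuture {
    type Output = i32;

    fn poll(mut self: Pin<&mut Self>, _cx: &mut Context<'_>) -> Poll<i32> {
        let user_data = self.user_data;
        if !self.submitted {
            // First poll: push the SQE; the operation has started but not finished.
            self.ring.borrow_mut().submitted.push_back(user_data);
            self.submitted = true;
            return Poll::Pending;
        }
        // Later polls: ready only once a result for our tag has arrived.
        match self.ring.borrow_mut().completed.remove(&user_data) {
            Some(result) => Poll::Ready(result),
            None => Poll::Pending,
        }
    }
}

/// A waker that does nothing, enough for an executor that simply re-polls.
struct NoopWake;

impl Wake for NoopWake {
    fn wake(self: Arc<Self>) {}
}

pub fn noop_waker() -> Waker {
    Waker::from(Arc::new(NoopWake))
}
```

This sketch ignores the waker because it assumes the executor re-polls every pending Future. A runtime that wants to avoid busy polling would store the waker from `cx` on the first poll and call it when the matching CQE arrives.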

Tracking Execution State

Here is a simple way, though not a best practice, to track the execution state of an operation:

- A shared memory structure is used to store operation results. This memory is accessible by both the executor and the Future.

- Each operation is tagged with a unique identifier using the user_data field of the SQE. This allows the CQE to be matched with its corresponding operation.

- When a CQE is retrieved, the shared memory is updated with the result, enabling the Future to access the completed operation’s data.
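One possible shape for that shared structure is a table keyed by the user_data tag. The names `OpTable`, `OpState` and `SharedOpTable` are hypothetical, and `Rc<RefCell<...>>` assumes a single-threaded executor; a multi-threaded runtime would need a thread-safe equivalent.

```rust
use std::cell::RefCell;
use std::collections::HashMap;
use std::rc::Rc;

/// State of one operation, keyed by its user_data tag.
#[derive(Debug, PartialEq)]
pub enum OpState {
    InFlight,
    Done(i32),
}

#[derive(Default)]
pub struct OpTable {
    next_id: u64,
    ops: HashMap<u64, OpState>,
}

/// Handle shared by the executor and every Future on the same thread.
pub type SharedOpTable = Rc<RefCell<OpTable>>;

impl OpTable {
    /// Future side: reserve a unique id to store in the SQE's user_data field.
    pub fn register(&mut self) -> u64 {
        let id = self.next_id;
        self.next_id += 1;
        self.ops.insert(id, OpState::InFlight);
        id
    }

    /// Executor side: record the result carried by a CQE.
    /// Returns false if no operation with that tag is registered.
    pub fn complete(&mut self, user_data: u64, result: i32) -> bool {
        match self.ops.get_mut(&user_data) {
            Some(state) => {
                *state = OpState::Done(result);
                true
            }
            None => false,
        }
    }

    /// Future side: take the result if the operation has completed,
    /// removing its entry so the table does not grow without bound.
    pub fn take_result(&mut self, user_data: u64) -> Option<i32> {
        let result = match self.ops.get(&user_data)? {
            OpState::Done(result) => *result,
            OpState::InFlight => return None,
        };
        self.ops.remove(&user_data);
        Some(result)
    }
}
```

The executor would call `complete` for each CQE it drains, and each Future would call `take_result` with its own tag when polled.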

Submission Strategies

When should SQEs be submitted to the kernel?

- Immediate Submission: Submit the SQE as soon as it is pushed to the queue. This reduces latency because the operation is sent to the kernel without delay. However, it increases CPU usage because every operation pays for its own submission call.

- Deferred Submission: Submit SQEs in batches when the executor is idle or when a threshold is reached. This strategy reduces the cost of frequent submissions, improving overall efficiency. However, it may introduce slight delays in processing.

For high-performance applications, the second strategy is generally preferred as it strikes a balance between latency and resource utilization.
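The trade-off between the two strategies can be illustrated by counting simulated submission calls. `Strategy` and `Submitter` are hypothetical names, and no real ring is involved: `flush` stands in for the call that hands pending SQEs to the kernel.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Strategy {
    /// Submit after every pushed SQE.
    Immediate,
    /// Submit once `threshold` SQEs are pending, or on an explicit flush.
    Deferred { threshold: usize },
}

pub struct Submitter {
    strategy: Strategy,
    /// SQEs pushed but not yet submitted.
    pending: usize,
    /// Simulated number of submission calls made so far.
    pub submit_calls: usize,
    /// Total number of SQEs handed to the "kernel".
    pub submitted: usize,
}

impl Submitter {
    pub fn new(strategy: Strategy) -> Self {
        Submitter { strategy, pending: 0, submit_calls: 0, submitted: 0 }
    }

    /// Record one pushed SQE and submit if the strategy says so.
    pub fn push(&mut self) {
        self.pending += 1;
        let should_flush = match self.strategy {
            Strategy::Immediate => true,
            Strategy::Deferred { threshold } => self.pending >= threshold,
        };
        if should_flush {
            self.flush();
        }
    }

    /// Submit everything pending in one call. With the deferred strategy the
    /// executor would also call this when it runs out of ready tasks.
    pub fn flush(&mut self) {
        if self.pending > 0 {
            self.submit_calls += 1;
            self.submitted += self.pending;
            self.pending = 0;
        }
    }
}
```

For ten operations, the immediate strategy makes ten submission calls, while a deferred strategy with a threshold of four makes three: two when the threshold is reached and one final flush for the remaining two entries.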

Conclusion

io_uring is a game-changer for asynchronous I/O in Linux. By understanding its mechanisms and integrating it with Rust’s async ecosystem, you can build efficient and scalable applications. Whether you are developing a runtime or simply optimizing I/O operations, io_uring provides the tools needed to achieve cutting-edge performance.